Which Cloud is the Best for Databricks: Azure, AWS, or GCP?

You want the best cloud platform for your data projects, but Databricks on Azure, AWS, and GCP all deliver a unified data analytics platform with a similar workspace experience. The core experience is broadly consistent whether you use Azure, AWS, or GCP, so your decision should reflect your current data needs, scalability requirements, and preferred storage services. If you value cost-effective data services, each cloud offers its own advantages. Databricks integrates deeply with every major cloud, so your priorities will determine the right fit.

When you compare Databricks on Azure, AWS, and GCP, you will notice that the core analytics experience remains consistent across all three cloud providers. However, several key factors can still influence your decision. These factors help you understand why one cloud might suit your analytics needs better than another.

Tip: Choosing the right cloud platform for Databricks depends on your organization’s priorities and existing infrastructure.

Here is a table that highlights the main criteria for comparison:

| Category | Azure | AWS | GCP |
|---|---|---|---|
| Ease of Integration | Excels in integration with Microsoft services | Broad compatibility | Emphasizes open-source support |
| Performance and Scalability | Competitive performance with integrated ecosystem | Highly scalable, backed by extensive infrastructure | Also scales well |
| Pricing Models | Pay-as-you-go with varying tiers | On-demand pricing based on resources consumed | Flexible pricing structures |
| Storage Solutions | Integrates with Azure Blob Storage and Azure Data Lake | Uses Amazon S3 for highly scalable object storage | Google Cloud Storage as main solution |
| Identity Management | Azure Active Directory (now Microsoft Entra ID) for access control | AWS IAM for robust access control | Google Cloud IAM for centralized control |

You should also consider these important criteria:

Deployment model: Do you need public, private, or hybrid options?

Pricing model: Do you prefer pay-as-you-go or subscription?

Data types supported: Does the platform support your analytics and storage needs?

Machine learning capabilities: Does the cloud offer built-in AI and analytics tools?

Cost management is essential. Databricks uses scalable, usage-based pricing, which gives you control over expenses. You must also evaluate security: Databricks provides enterprise-grade features such as fine-grained access controls and encryption to protect your data.

You will find that Databricks delivers a unified workspace for analytics on Azure, AWS, and GCP. Each cloud offers a familiar interface, so your teams can collaborate easily. The main differences come from how each cloud integrates with its own services and manages scalability.

Azure provides seamless access to Microsoft analytics tools and identity management.

AWS stands out for its broad analytics ecosystem and unmatched scalability.

GCP emphasizes open-source analytics and flexible infrastructure.

When you choose among these cloud providers, you should ask:

Do you need user-level access controls for your analytics projects?

Will your analytics platform handle regulated or sensitive data?

Is cost predictability more important than advanced scalability or security?

Your answers will guide you to the best Databricks option among Azure, AWS, and GCP for your analytics workloads.

You gain a strong advantage when you use Databricks with Azure because of its deep connection to native services. This integration lets you move data quickly, manage security, and streamline analytics. You can connect to Azure data sources and control access with familiar tools. The table below shows how Databricks links with key Azure services:

| Azure Service | Integration Details |
|---|---|
| Azure Data Lake | The native connector supports multiple access methods and simplifies security using Azure AD identity. |
| Azure Synapse | High-performance connector enables fast data transfer and supports streaming data. |
| Azure Active Directory | Ensures that only authorized users can access the data and analytics environment. |
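
As a rough illustration of the Azure Data Lake row above, the sketch below builds the Spark configuration entries commonly used for OAuth access to Azure Data Lake Storage Gen2 with a service principal. The function names, the storage account `acct`, and the container `raw` are hypothetical placeholders, and your workspace may rely on a different access method, so treat this as a starting point under those assumptions rather than the required setup.

```python
def adls_oauth_conf(storage_account: str, tenant_id: str,
                    client_id: str, client_secret: str) -> dict:
    """Return Spark conf entries for service-principal (OAuth) access to ADLS Gen2.

    All argument values are supplied by the caller; nothing here is read
    from a real Azure tenant.
    """
    host = f"{storage_account}.dfs.core.windows.net"
    return {
        f"fs.azure.account.auth.type.{host}": "OAuth",
        f"fs.azure.account.oauth.provider.type.{host}":
            "org.apache.hadoop.fs.azurebfs.oauth2.ClientCredsTokenProvider",
        f"fs.azure.account.oauth2.client.id.{host}": client_id,
        f"fs.azure.account.oauth2.client.secret.{host}": client_secret,
        f"fs.azure.account.oauth2.client.endpoint.{host}":
            f"https://login.microsoftonline.com/{tenant_id}/oauth2/token",
    }


def abfss_uri(container: str, storage_account: str, path: str = "") -> str:
    """Build an abfss:// URI for a path inside an ADLS Gen2 container."""
    return (f"abfss://{container}@{storage_account}"
            f".dfs.core.windows.net/{path.lstrip('/')}")


# Hypothetical values, for illustration only.
conf = adls_oauth_conf("acct", "tenant-123", "client-abc", "example-secret")
uri = abfss_uri("raw", "acct", "/sales/2024")
```

In a notebook you would typically apply each entry with `spark.conf.set(key, value)` and fetch the client secret from a secret scope with `dbutils.secrets.get` instead of hard-coding it.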

You also benefit from advanced security and compliance features. These include just-in-time user provisioning, private connectivity, and customer-managed keys. The table below highlights important security features:

| Feature | Description |
|---|---|
| Authentication and access control overview | Overview of authentication methods and access control mechanisms in Azure Databricks. |
| Automatically provision users (JIT) | Enable just-in-time user provisioning to automatically create user accounts during SSO login. |
| Data security and encryption overview | Overview of encryption options and data protection features in Azure Databricks. |
| Azure Compliance Certifications | Azure holds industry-leading compliance certifications, ensuring Azure Databricks workloads meet regulatory standards. |

You should choose Azure integration if your organization relies on Microsoft services, needs strict compliance, or wants seamless access to enterprise security tools.

When you use Databricks with AWS, you unlock broad compatibility with many cloud services. You can connect to Amazon S3 by launching a Databricks cluster with an IAM role that grants S3 access, attached to the cluster as an instance profile. This process involves selecting or creating a cluster, navigating to advanced options, and choosing the right IAM role. You also gain access to AWS services such as Redshift, with IAM handling secure data management.
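
If you create clusters through the API rather than the UI, the same choice can be expressed in the cluster specification. The sketch below builds a request body with the IAM role attached through `aws_attributes.instance_profile_arn`. The helper name, the ARN, the runtime version string, and the node type are placeholders chosen for illustration, so substitute values that exist in your own account and workspace.

```python
import json


def cluster_spec_with_instance_profile(cluster_name: str,
                                       instance_profile_arn: str,
                                       spark_version: str,
                                       node_type_id: str,
                                       num_workers: int = 2) -> dict:
    """Build a cluster spec that attaches an S3-capable IAM role to the cluster."""
    # Light sanity check on the ARN shape before sending anything to the API.
    if not (instance_profile_arn.startswith("arn:aws:iam::")
            and ":instance-profile/" in instance_profile_arn):
        raise ValueError("expected an IAM instance profile ARN")
    return {
        "cluster_name": cluster_name,
        "spark_version": spark_version,
        "node_type_id": node_type_id,
        "num_workers": num_workers,
        "aws_attributes": {"instance_profile_arn": instance_profile_arn},
    }


# Placeholder values, for illustration only.
spec = cluster_spec_with_instance_profile(
    cluster_name="s3-etl",
    instance_profile_arn="arn:aws:iam::123456789012:instance-profile/databricks-s3-access",
    spark_version="15.4.x-scala2.12",
    node_type_id="i3.xlarge",
)
print(json.dumps(spec, indent=2))
```

The instance profile must also be registered in the workspace before a cluster can use it, which is the step the advanced options dropdown relies on.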

The table below outlines the benefits of using Databricks with AWS security and data management services:

| Benefit | Description |
|---|---|
| Data Discovery | Provides a unified view of all data assets, enabling quick search and categorization of datasets. |
| Data Governance | Centralizes access controls and auditing, ensuring compliance with regulations like GDPR and CCPA. |
| Data Lineage | Tracks data flow, providing transparency for troubleshooting and optimizing data workflows. |
| Data Sharing and Access | Allows fine-grained access controls, facilitating secure data sharing within and outside the organization. |
| Enhanced Security | Centralizes policy management to enforce consistent security policies, reducing unauthorized access risks. |
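
To make the fine-grained access control row more concrete, here is a minimal sketch that generates SQL `GRANT` statements. It assumes the governance layer is Unity Catalog, which this table does not name explicitly, and it uses a hypothetical table `main.sales.orders` and group `analysts`.

```python
from typing import List, Sequence


def unity_catalog_grants(table: str, principal: str,
                         privileges: Sequence[str] = ("SELECT",)) -> List[str]:
    """Return GRANT statements giving a principal access to one table.

    The table must be a three-level name: catalog.schema.table.
    """
    parts = table.split(".")
    if len(parts) != 3 or not all(parts):
        raise ValueError("table must look like catalog.schema.table")
    catalog, schema, _ = parts
    grantee = f"`{principal}`"
    # A principal needs usage rights on the parent catalog and schema
    # before a table-level privilege has any effect.
    statements = [
        f"GRANT USE CATALOG ON CATALOG {catalog} TO {grantee}",
        f"GRANT USE SCHEMA ON SCHEMA {catalog}.{schema} TO {grantee}",
    ]
    statements += [f"GRANT {p} ON TABLE {table} TO {grantee}" for p in privileges]
    return statements


for stmt in unity_catalog_grants("main.sales.orders", "analysts"):
    print(stmt)
```

Each statement could then be run from a notebook with `spark.sql(stmt)` by a user who is allowed to grant those privileges.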

You should consider AWS integration if you need scalable storage, advanced governance, or want to leverage a wide range of analytics services.

You can connect Databricks to GCP services by enabling the BigQuery Storage API, creating a service account, and setting up a Cloud Storage bucket. This setup lets you work with BigQuery and Google Cloud Storage, with IAM securing the workflow. Databricks improves performance by pushing query filters down to BigQuery, which reduces data transfer and speeds up analytics.
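
A short sketch of what a filtered BigQuery read can look like from a notebook follows. It assumes the cluster's service account can already read the table, and the helper name, table name, and predicate are hypothetical. The function takes the Spark session as an argument so it stays independent of any particular environment.

```python
def read_bigquery_filtered(spark, table: str, predicate: str):
    """Read a BigQuery table and apply a filter the connector can push down.

    `spark` is an active SparkSession (in a Databricks notebook, the built-in
    `spark` object). `table` is a fully qualified name such as
    project.dataset.table, and `predicate` is a SQL boolean expression.
    """
    return (
        spark.read.format("bigquery")   # BigQuery data source
        .option("table", table)         # which table to read
        .load()
        .where(predicate)               # filter applied before any action runs
    )


# Example call inside a notebook (placeholder names):
# df = read_bigquery_filtered(spark, "my-project.sales.orders",
#                             "order_date >= '2024-01-01'")
# df.show(5)
```

Because Spark evaluates lazily, the filter is part of the query plan before any data is fetched, which is what allows the connector to send it to BigQuery instead of filtering after transfer.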

You benefit from GCP integration in several scenarios:

Secure workflows use service accounts for automatic authentication.

Automated data transfer moves large datasets between GCP and Databricks without manual credentials.

Scalability lets you handle growing workloads with ease.

You should choose GCP integration if your projects require advanced AI or seamless data movement, or if you want to use open-source analytics services.

You need to consider performance and scalability when selecting a cloud for Databricks. Fast cluster startup and job completion can help you deliver data projects on time and within budget. Here is what you can expect for Databricks on each cloud:

SQL warehouses on Databricks start in 10-30 seconds.

Cluster startup usually takes 2-5+ minutes, depending on your configuration.

Serverless pools can reduce initial latency, but you may face some limitations.

You should also look at resource availability and scalability. Each cloud offers unique strengths for Databricks workloads. The table below highlights key differences:

| Cloud Provider | Key Strengths | Notable Features |
|---|---|---|
| AWS | Maturity and scale, largest market share | EMR (Elastic MapReduce) for big data processing |
| Azure | Strong in enterprise integration, hybrid cloud strategy | Databricks, HDInsight, Synapse Analytics |
| GCP | Focus on data analytics and machine learning | Dataproc, Dataflow, BigQuery |

You want to choose a cloud that matches your data needs and supports your performance and scalability goals. This decision can impact your ability to handle large data volumes and complex analytics.

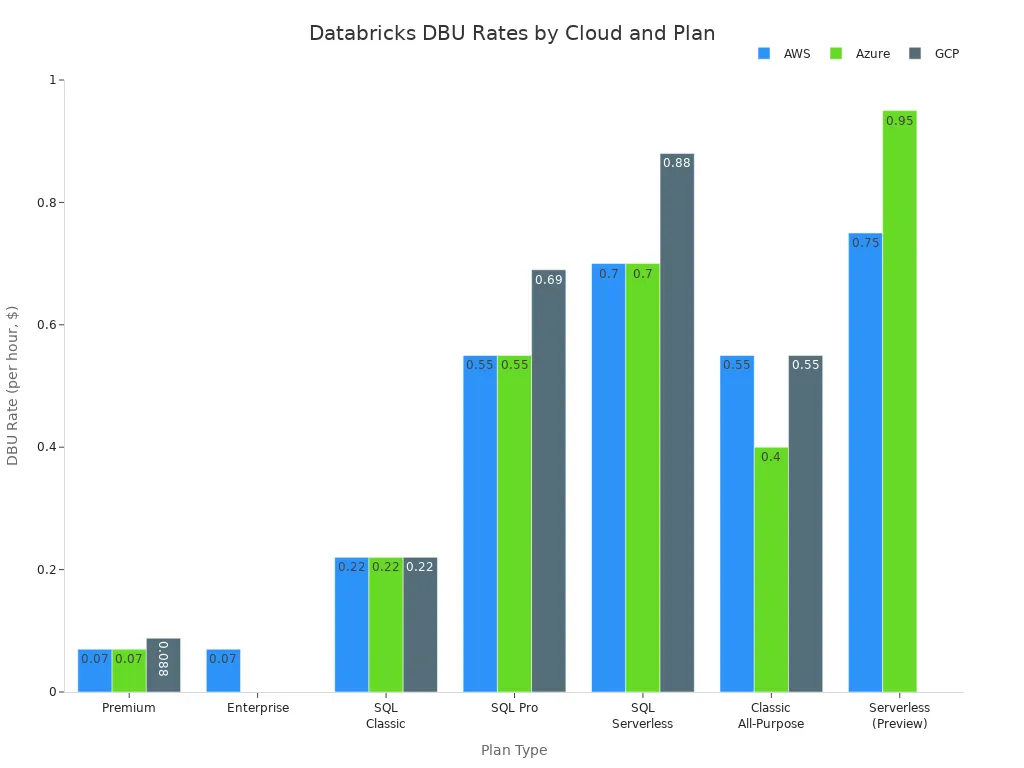

Pricing considerations play a major role in your cloud selection. You need to understand how each provider structures Databricks pricing and what options exist for cost optimization. The table below compares Databricks pricing across AWS, Azure, and GCP:

| Cloud Provider | Plan Type | Rate (USD per DBU) |
|---|---|---|
| AWS | Premium | $0.07 |
| Azure | Premium | $0.07 |
| GCP | Premium | $0.088 |
| AWS | Enterprise | $0.07 |
| Azure | Enterprise | - |
| GCP | Enterprise | - |
| AWS | SQL Classic | $0.22 |
| Azure | SQL Classic | $0.22 |
| GCP | SQL Classic | $0.22 |
| AWS | SQL Pro | $0.55 |
| Azure | SQL Pro | $0.55 |
| GCP | SQL Pro | $0.69 |
| AWS | SQL Serverless | $0.70 |
| Azure | SQL Serverless | $0.70 |
| GCP | SQL Serverless | $0.88 |
| AWS | Classic All-Purpose | $0.55 |
| Azure | Classic All-Purpose | $0.40 |
| GCP | Classic All-Purpose | $0.55 |
| AWS | Serverless (Preview) | $0.75 |
| Azure | Serverless (Preview) | $0.95 |
| GCP | Serverless (Preview) | - |

You should review these pricing models to find the best fit for your budget and workload. Each cloud offers different cost optimization features, such as reserved instances or serverless options. You can lower your cost by matching your usage patterns to the right plan. Transparent pricing helps you plan and control your data expenses. When you focus on performance and scalability, along with pricing considerations, you set your Databricks projects up for success.
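
To turn the rates above into a budget figure, you multiply the rate by the DBUs a workload consumes per hour and by the hours it runs. The sketch below does that arithmetic using a few rates copied from the table. The workload size (10 DBUs per hour for 100 hours) is a made-up example, and the estimate covers only the DBU charge: for classic compute, the cloud provider's virtual machine and storage charges are billed separately and are not modeled here.

```python
# Rates copied from the comparison table (USD per DBU); verify current
# list prices before relying on them.
DBU_RATES = {
    "SQL Classic":         {"aws": 0.22, "azure": 0.22, "gcp": 0.22},
    "SQL Pro":             {"aws": 0.55, "azure": 0.55, "gcp": 0.69},
    "SQL Serverless":      {"aws": 0.70, "azure": 0.70, "gcp": 0.88},
    "Classic All-Purpose": {"aws": 0.55, "azure": 0.40, "gcp": 0.55},
}


def estimate_dbu_cost(plan: str, cloud: str,
                      dbus_per_hour: float, hours: float) -> float:
    """Estimate the DBU charge in USD, rounded to cents."""
    if dbus_per_hour < 0 or hours < 0:
        raise ValueError("usage values must be non-negative")
    rate = DBU_RATES[plan][cloud]
    return round(rate * dbus_per_hour * hours, 2)


# Hypothetical workload: 10 DBUs per hour, 100 hours per month.
for cloud in ("aws", "azure", "gcp"):
    print(cloud, estimate_dbu_cost("SQL Pro", cloud, 10, 100))
```

Running the same workload through several plan types is a quick way to see where a rate difference between clouds actually matters for your usage pattern.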

You should choose Databricks on AWS when your organization handles massive data workloads or requires flexible scaling. This cloud stands out because it delivers seamless integration with core AWS services. You can connect Databricks on AWS directly to S3, Redshift, and AWS Glue, which streamlines your data management and analytics pipelines. The platform runs on EC2 instances, so you can scale resources up or down automatically. Spot Instances help you save costs during heavy data processing.

| Feature | Description |
|---|---|
| Seamless Integration with AWS | Works effortlessly with AWS services like S3, Redshift, and AWS Glue for data management. |
| Elastic Compute | Runs on EC2 instances that can scale automatically and utilize Spot Instances for cost savings. |
| Delta Lake | A powerful data lake storage solution for managing large datasets with ACID transactions. |
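
The elastic compute row can be sketched as a cluster specification that combines autoscaling with Spot Instances. The helper name, worker counts, runtime version, and node type below are illustrative assumptions. The `aws_attributes` settings shown keep the first node on-demand and fall back to on-demand capacity when Spot capacity is unavailable.

```python
def autoscaling_spot_cluster(cluster_name: str, spark_version: str,
                             node_type_id: str,
                             min_workers: int, max_workers: int) -> dict:
    """Build a cluster spec that autoscales and prefers Spot Instances."""
    if not 0 <= min_workers <= max_workers:
        raise ValueError("need 0 <= min_workers <= max_workers")
    return {
        "cluster_name": cluster_name,
        "spark_version": spark_version,
        "node_type_id": node_type_id,
        # Databricks adds or removes workers within this range based on load.
        "autoscale": {"min_workers": min_workers, "max_workers": max_workers},
        "aws_attributes": {
            "first_on_demand": 1,                  # keep the driver on-demand
            "availability": "SPOT_WITH_FALLBACK",  # use on-demand if Spot is unavailable
        },
    }


# Placeholder values, for illustration only.
spec = autoscaling_spot_cluster("nightly-etl", "15.4.x-scala2.12",
                                "i3.xlarge", min_workers=2, max_workers=8)
```

Keeping the driver on-demand is a common way to protect a job from failing outright when Spot workers are reclaimed.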

You benefit from Databricks on AWS because it supports a unified analytics platform built on Apache Spark. This setup lets you handle data engineering, data science, and machine learning in one place. You can streamline your data workflows and boost collaboration across teams. Many organizations use Databricks on AWS for these reasons:

Seamless data engineering and data science

Collaboration features for team efficiency

Managed infrastructure to reduce operational overhead

Integration with open-source tools for ETL processes

Support for machine learning workflows

Tip: Databricks on AWS gives you granular control over EC2 nodes, making it ideal for spiky workloads or diverse analytics needs.

You can leverage Apache Spark for distributed data processing, support ETL operations, and facilitate complex analytics. Databricks on AWS also integrates with machine learning libraries, so you can develop and deploy models efficiently. If your data projects demand high throughput, flexible scaling, and deep AWS integration, Databricks on AWS is the best choice.

You should select Databricks on Azure if your organization operates in regulated industries such as healthcare or finance. This cloud excels at meeting strict compliance requirements and offers advanced security features. Databricks on Azure supports a wide range of compliance certifications, which helps you meet industry standards and regulatory obligations.

| Compliance Standard |
|---|
| Canada Protected B |
| HIPAA |
| HITRUST |
| IRAP |
| PCI-DSS |

Databricks on Azure provides robust compliance capabilities. You can use data anonymization and encryption tools to protect sensitive information. The platform ensures your data remains secure, accessible, and trackable from end to end. Audit logs and trails document every access and change, giving you proof of compliance. Governance tools offer transparency and control, while real-time analytics let you catch and fix discrepancies quickly.

| Feature | Description |
|---|---|
| Compliance Capabilities | Supports HIPAA and GDPR compliance through data anonymization and encryption tools. |
| Data Security | Ensures data is secure, accessible, and trackable from end to end. |
| Audit Capabilities | Logs and trails document every access and change, providing proof of compliance. |
| Governance Tools | Offers access logs, change history, and controls for transparency and auditability. |
| Real-time Analytics | Allows teams to catch and fix discrepancies on the spot, enhancing data accuracy and reliability. |

You gain additional value from Databricks on Azure through its integration with Microsoft services. You can build data platforms, enable real-time streaming, and support business intelligence reporting. The platform connects with Azure Data Factory, Azure Event Hubs, Power BI, Azure Key Vault, and Azure Machine Learning. If your top priorities are compliance, security, and seamless integration with Microsoft tools, Databricks on Azure is the right fit.

You should consider Databricks on GCP if your organization focuses on advanced AI and machine learning projects. This cloud offers a unified platform for the entire AI/ML lifecycle, from data ingestion to model monitoring. Databricks on GCP provides dynamic scaling of compute resources, which helps you manage large datasets and complex algorithms efficiently.

| Feature | Description |
|---|---|
| AI Playground | Prototype and test generative AI models with no-code prompt engineering and parameter tuning. |
| Agent Bricks | Simple approach to build and optimize domain-specific, high-quality AI agent systems for common AI use cases. |
| Foundation Models | Serve state-of-the-art LLMs including Meta Llama, Anthropic Claude, and OpenAI GPT through secure, scalable APIs. |
| Mosaic AI Agent Framework | Build and deploy production-quality agents including RAG applications and multi-agent systems with Python. |
| MLflow for GenAI | Measure, improve, and monitor quality throughout the GenAI application lifecycle using AI-powered metrics and comprehensive trace observability. |
| Vector Search | Store and query embedding vectors with automatic syncing to your knowledge base for RAG applications. |
| Foundation Model Fine-tuning | Customize foundation models with your own data to optimize performance for specific applications. |
| AutoML | Automatically build high-quality models with minimal code using automated feature engineering and hyperparameter tuning. |
| Databricks Runtime for ML | Pre-configured clusters with TensorFlow, PyTorch, Keras, and GPU support for deep learning development. |
| MLflow tracking | Track experiments, compare model performance, and manage the complete model development lifecycle. |
| Feature engineering | Create, manage, and serve features with automated data pipelines and feature discovery. |
| Databricks notebooks | Collaborative development environment with support for Python, R, Scala, and SQL for ML workflows. |
| Model Serving | Deploy custom models and LLMs as scalable REST endpoints with automatic scaling and GPU support. |
| AI Gateway | Govern and monitor access to generative AI models with usage tracking, payload logging, and security controls. |
| External models | Integrate third-party models hosted outside Databricks with unified governance and monitoring. |
| Foundation model APIs | Access and query state-of-the-art open models hosted by Databricks. |
| Unity Catalog | Govern data, features, models, and functions with unified access control, lineage tracking, and discovery. |
| Lakehouse Monitoring | Monitor data quality, model performance, and prediction drift with automated alerts and root cause analysis. |
| MLflow for Models | Track, evaluate, and monitor generative AI applications throughout the development lifecycle. |

You can use Databricks on GCP to streamline your AI/ML workflows. The platform supports popular frameworks like TensorFlow and scikit-learn, so you can develop and deploy models with ease. MLflow integration helps you manage the entire machine learning process, from experiment tracking to deployment. Unity Catalog supports data governance and compliance, which is crucial for regulated industries.

Dynamic scaling of compute resources

Support for ML frameworks

MLflow for lifecycle management

Governance with Unity Catalog

Lakehouse architecture for diverse data types
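
As a small illustration of MLflow lifecycle management, the sketch below logs the parameters and metrics of one training run. The function name, the `tracker` argument, and the example values are hypothetical. By default the function imports `mlflow`, which is preinstalled on Databricks Runtime for ML, and the `tracker` argument exists only so the logic can be exercised without it.

```python
def log_training_run(params: dict, metrics: dict,
                     run_name: str = "example-run", tracker=None) -> str:
    """Record one experiment run and return its run ID.

    `tracker` defaults to the mlflow module; any object exposing
    start_run, log_params, and log_metrics can stand in for it.
    """
    if tracker is None:
        import mlflow as tracker  # imported lazily so the sketch loads without mlflow
    with tracker.start_run(run_name=run_name) as run:
        tracker.log_params(params)    # e.g. hyperparameters
        tracker.log_metrics(metrics)  # e.g. validation scores
        return run.info.run_id


# Example call in a notebook (made-up values):
# run_id = log_training_run({"max_depth": 6, "learning_rate": 0.1},
#                           {"val_auc": 0.91})
```

Runs logged this way appear in the workspace experiment UI, where you can compare them side by side before choosing a model to register or deploy.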

If your organization values innovation in AI, rapid experimentation, and access to advanced machine learning tools, Databricks on GCP offers the best environment.

You must choose the cloud for Databricks based on your organization’s priorities. Consider integration, compliance, pricing, and AI/ML capabilities. Each platform offers unique advantages:

| Cloud Platform | Key Advantage |
|---|---|
| AWS | Extensive service range and integrations |
| Azure | Deep Microsoft integration and compliance |
| GCP | Advanced analytics and machine learning |

Before deciding, review your current cloud investments and data strategy. Assess long-term goals by asking:

Does the technology align with your overall strategy?

Can you measure ROI and usability for end users?

All three clouds provide strong options. Your context and goals determine the best fit.
