DuckDB vs Spark: Benchmarking Speed and Efficiency in 2025

Explore the speed and efficiency of DuckDB versus Spark.

| Features | DuckDB | Apache Spark |
|---|---|---|
| Installation Speed | Under 10 seconds | Several minutes |
| Query Execution Speed | Very fast for local jobs | Slower for local jobs |
| Resource Usage | Low CPU and memory | Higher CPU and memory |
| Scalability | Best for single-node jobs | Handles distributed workloads |
| Data Handling | Zero-Copy Workflows | Traditional Data Handling |
| Community Support | Fast-growing community | Established large community |
| Cost Efficiency | Low cost for small jobs | Higher cost for clusters |
| Energy Consumption | Less energy used | More energy consumed |
| Machine Learning Support | Good for analytics | Strong for large ML tasks |

If you want to know which engine is faster and more energy-efficient, comparing DuckDB and Spark at scale is key. DuckDB delivers results faster than Spark for most single-machine data jobs in 2025. Tests show DuckDB can handle up to 23 GB of data on a laptop with 16 GB of RAM without slowing down. Spark, in contrast, is built to spread the same work across more than one computer. The table below summarizes the differences:

| Dataset Size | DuckDB Performance | Spark Performance |
|---|---|---|
| 10GB | Much faster | Slower |
| 100GB | Way faster | Slower |
| 200GB | Works well on one computer | Needs many computers |

Overall, when you compare DuckDB and Spark at scale, DuckDB is typically faster and more power-efficient.

DuckDB is faster and uses less power for data up to 200GB. This makes it great for checking data on your own computer.

Spark is better for very big data over 1TB. It uses many computers to work faster.

DuckDB is simple to use and does not need much setup. You can start looking at data fast without hard steps.

Spark has many tools for smart data work and machine learning. It is good for big data jobs.

You can use both DuckDB and Spark together. DuckDB is good for fast questions. Spark is good for big data work.

When you compare DuckDB and Spark, you want the comparison to be fair. Most benchmarks rely on standard suites such as TPC-H, together with mid-scale tests. These help you see how each engine handles different data sizes and jobs.

| Methodology | Description | Performance Insights |
|---|---|---|
| TPC-H Benchmarks | Standard tests for checking database speed. | DuckDB does well at all sizes. Spark has trouble with big data. |
| Mid-Scale Tests | Real tests with data from 5 to 200 GB. | DuckDB is better if data fits in RAM. Spark is better with huge data. |

You can trust these tests to show real speed and power use.

You need to use data that is like real work data. Most tests use TPC-H data in Parquet format. These come in many sizes, so you can see how each engine works with small and big jobs.

| Dataset Type | Size | Format |
|---|---|---|
| TPC-H | Changes by scale factor | Parquet |

Many tests begin with a 50 GB dataset.

Each query runs two times: first as a cold run, then as a hot run.

DuckDB does well with all dataset sizes.
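The cold-run and hot-run procedure described above can be sketched as a small timing harness. This is a minimal illustration, not the harness used in the tests: `time_cold_and_hot` and `fake_query` are hypothetical names, and `fake_query` stands in for a real engine call. A truly cold run would also require clearing operating-system caches or restarting the process, which this sketch does not do.

```python
import time
from typing import Callable, Dict


def time_cold_and_hot(run_query: Callable[[], object]) -> Dict[str, float]:
    """Run the same query twice and return wall-clock seconds per run.

    The first call is treated as the cold run, the second as the hot run.
    """
    timings: Dict[str, float] = {}
    for label in ("cold", "hot"):
        start = time.perf_counter()
        run_query()
        timings[label] = time.perf_counter() - start
    return timings


def fake_query() -> int:
    # Hypothetical stand-in for executing a SQL query against an engine.
    return sum(range(100_000))


result = time_cold_and_hot(fake_query)
print(f"cold: {result['cold']:.4f} s, hot: {result['hot']:.4f} s")
```

Reporting both numbers separately matters because the hot run often benefits from data that is already cached, so averaging the two would hide the difference.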

The computer you use matters a lot in tests. You want to use machines like the ones you might use at work. Here is a normal setup for these tests:

| Specification | Details |
|---|---|
| CPU model | Intel(R) Xeon(R) CPU E5-2676 v3 @ 2.40 GHz |
| CPU cores | 40 |
| Memory | 160 GB |
| GPU | Not listed |

This setup gives DuckDB and Spark enough power to do their best.

You should pick test cases that are common in real jobs. These include aggregation, joins, and search.

| Operation | Description |
|---|---|
| Aggregation | Jobs like WHERE, GROUP BY, HAVING, MAX, AVG, and more. |
| Join | Joining tables but only picking some columns. |
| Search | Looking for a row using one column ID. |

These cases show how DuckDB and Spark handle the jobs you do often.
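To make the three test cases concrete, here is a rough sketch of what each query shape might look like. The exact benchmark queries are not given, so the `customers` and `orders` tables and their columns are hypothetical. Python's built-in `sqlite3` module is used only as a stand-in so the sketch runs without installing anything; the SQL itself is plain standard SQL of the kind both DuckDB and Spark SQL accept.

```python
import sqlite3

# In-memory stand-in database with two tiny, hypothetical tables.
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE customers (customer_id INTEGER PRIMARY KEY, name TEXT, region TEXT);
    CREATE TABLE orders (order_id INTEGER PRIMARY KEY, customer_id INTEGER, amount REAL);
    INSERT INTO customers VALUES (1, 'Ada', 'EU'), (2, 'Bo', 'US'), (3, 'Cy', 'EU');
    INSERT INTO orders VALUES
        (10, 1, 50.0), (11, 1, 150.0), (12, 2, 20.0), (13, 3, 300.0);
""")

# Aggregation: WHERE + GROUP BY + HAVING with MAX and AVG.
aggregation_sql = """
    SELECT customer_id, MAX(amount) AS max_amount, AVG(amount) AS avg_amount
    FROM orders
    WHERE amount > 10
    GROUP BY customer_id
    HAVING AVG(amount) >= 100
    ORDER BY customer_id
"""

# Join: combine two tables but select only a few columns.
join_sql = """
    SELECT c.name, o.amount
    FROM orders AS o
    JOIN customers AS c ON c.customer_id = o.customer_id
    ORDER BY o.order_id
"""

# Search: look up a single row by one ID column.
search_sql = "SELECT order_id, amount FROM orders WHERE order_id = ?"

aggregation_rows = conn.execute(aggregation_sql).fetchall()
join_rows = conn.execute(join_sql).fetchall()
search_rows = conn.execute(search_sql, (12,)).fetchall()

print(aggregation_rows)
print(join_rows)
print(search_rows)
```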

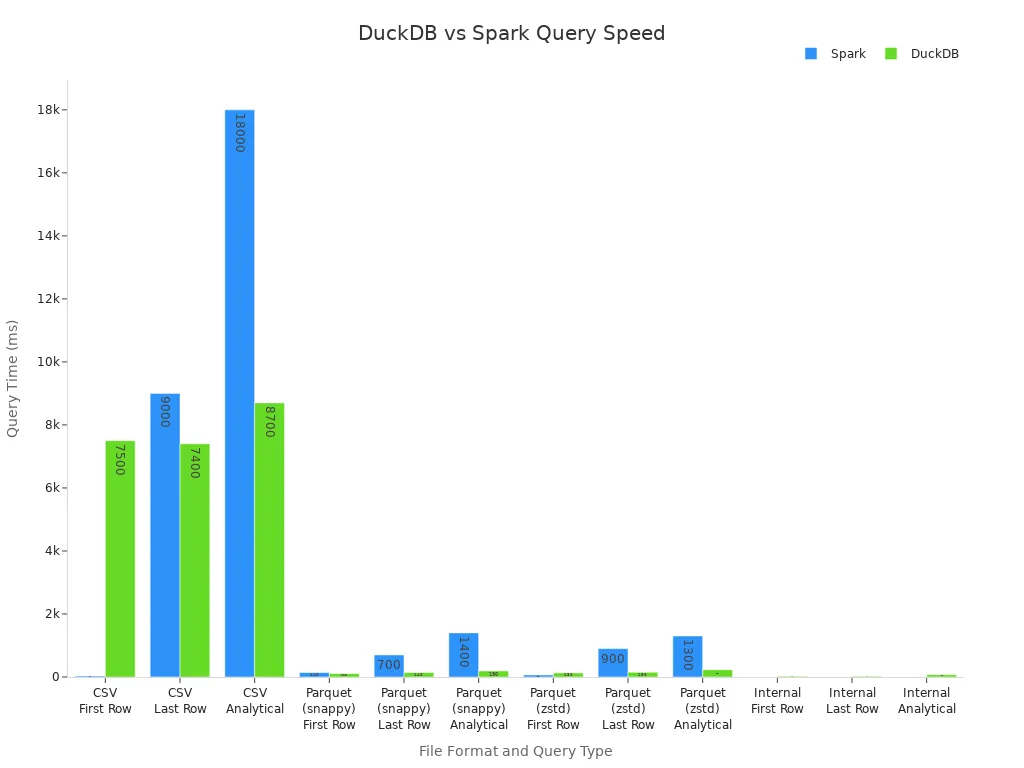

When you work with a 10GB dataset, you want fast answers. DuckDB and Spark both handle this size well, but their speeds change based on file format and query type. You can see the differences in the table below:

| Engine | File format | First Row Lookup | Last Row Lookup | Analytical Query |
|---|---|---|---|---|
| Spark | CSV | 31 ms | 9 s | 18 s |
| DuckDB | CSV | 7.5 s | 7.4 s | 8.7 s |
| Spark | Parquet (snappy) | 140 ms | 700 ms | 1.4 s |
| DuckDB | Parquet (snappy) | 110 ms | 140 ms | 190 ms |
| Spark | Parquet (zstd) | 66 ms | 900 ms | 1.3 s |
| DuckDB | Parquet (zstd) | 130 ms | 150 ms | 230 ms |
| DuckDB | Internal | 23 ms | 22 ms | 75 ms |

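Tip: DuckDB shines when you use Parquet files. Every lookup and analytical query in the table finishes in well under a second. Spark is faster for a first-row lookup on CSV (31 ms versus 7.5 s) and on zstd Parquet (66 ms versus 130 ms), but DuckDB leads on last-row lookups and on analytical queries in every format.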
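Because the table mixes milliseconds and seconds, it helps to convert everything to seconds before comparing. The sketch below does that with the timings copied from the table and prints the Spark-to-DuckDB ratio for each query; the helper names `to_seconds` and `spark_over_duckdb` are illustrative only. A ratio above 1 means DuckDB was faster, below 1 means Spark was faster.

```python
from typing import Dict, Tuple

# Timings copied from the 10GB table: (first row, last row, analytical query).
TIMINGS: Dict[Tuple[str, str], Tuple[str, str, str]] = {
    ("Spark", "CSV"): ("31 ms", "9 s", "18 s"),
    ("DuckDB", "CSV"): ("7.5 s", "7.4 s", "8.7 s"),
    ("Spark", "Parquet (snappy)"): ("140 ms", "700 ms", "1.4 s"),
    ("DuckDB", "Parquet (snappy)"): ("110 ms", "140 ms", "190 ms"),
    ("Spark", "Parquet (zstd)"): ("66 ms", "900 ms", "1.3 s"),
    ("DuckDB", "Parquet (zstd)"): ("130 ms", "150 ms", "230 ms"),
}


def to_seconds(text: str) -> float:
    """Convert a string such as '140 ms' or '1.4 s' to seconds."""
    value, unit = text.split()
    return float(value) / 1000 if unit == "ms" else float(value)


def spark_over_duckdb(file_format: str) -> Tuple[float, ...]:
    """Return Spark time divided by DuckDB time for the three query types."""
    spark = [to_seconds(t) for t in TIMINGS[("Spark", file_format)]]
    duck = [to_seconds(t) for t in TIMINGS[("DuckDB", file_format)]]
    return tuple(s / d for s, d in zip(spark, duck))


for fmt in ("CSV", "Parquet (snappy)", "Parquet (zstd)"):
    first, last, analytical = spark_over_duckdb(fmt)
    print(f"{fmt}: first={first:.3f} last={last:.3f} analytical={analytical:.3f}")
```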

As your data grows to 100GB, you need to watch for slowdowns. Both DuckDB and Spark finish big queries in under 10 minutes. Here is a quick look:

| Database | Query Completion Time (seconds) |
|---|---|
| DuckDB | 546 |
| Spark | 505 |

You see that Spark finishes a bit faster at this size: 505 seconds versus 546, a gap of 41 seconds (about 8%). DuckDB stays close, showing strong performance for single-node jobs.

When you reach 1TB, the gap between the two engines grows. Spark uses its distributed power to handle huge data. You can run queries across many machines and finish jobs that would not fit in memory on one computer. DuckDB works best when your data fits in RAM or fast storage. For 1TB, you may see Spark finish jobs that DuckDB cannot complete on a single node. If you need to process massive datasets, Spark gives you the scale you need. DuckDB keeps its speed for jobs that fit local resources.

When you run data jobs, you want to know how much CPU and memory each engine uses. DuckDB uses vectorized execution: it processes data in small batches of values instead of one row at a time. This makes it faster and more efficient. You get answers quickly, and your computer does not get too busy. DuckDB also supports zero-copy workflows, moving data without making extra copies. This saves memory and helps finish tasks faster.
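The idea of processing data in small groups can be illustrated with a toy pipeline. This is a conceptual sketch only, not DuckDB's implementation: the function names are hypothetical, the batch size is an illustrative choice, and pure Python will not show the real speed benefit. It only shows the control flow, where each operator handles a whole batch of column values per call instead of a single row.

```python
from typing import Iterator, List, Tuple


def batches(
    prices: List[float], quantities: List[int], batch_size: int
) -> Iterator[Tuple[List[float], List[int]]]:
    """Yield the two columns in aligned slices of at most batch_size values."""
    for start in range(0, len(prices), batch_size):
        end = start + batch_size
        yield prices[start:end], quantities[start:end]


def filter_batch(
    prices: List[float], quantities: List[int], min_price: float
) -> Tuple[List[float], List[int]]:
    """Filter operator: keep the positions where price >= min_price."""
    keep = [i for i, price in enumerate(prices) if price >= min_price]
    return [prices[i] for i in keep], [quantities[i] for i in keep]


def revenue_batch(prices: List[float], quantities: List[int]) -> float:
    """Aggregate operator: sum price * quantity over one batch."""
    return sum(price * qty for price, qty in zip(prices, quantities))


def total_revenue(
    prices: List[float],
    quantities: List[int],
    min_price: float,
    batch_size: int = 2048,  # illustrative batch size
) -> float:
    """Run filter then aggregate, one batch at a time."""
    total = 0.0
    for price_batch, qty_batch in batches(prices, quantities, batch_size):
        kept_prices, kept_qty = filter_batch(price_batch, qty_batch, min_price)
        total += revenue_batch(kept_prices, kept_qty)
    return total


prices = [5.0, 12.0, 8.0, 20.0, 15.0]
quantities = [1, 2, 3, 4, 5]
print(total_revenue(prices, quantities, min_price=10.0, batch_size=2))
```

In a real engine the per-batch work runs in tight compiled loops over contiguous memory, which is where the efficiency gain comes from.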

Spark uses a regular way to process data. It can use more CPU and memory, especially with big datasets. Spark is more complex, so you might need to change more settings and use more resources.

| Feature | DuckDB Performance | Spark Performance |
|---|---|---|
| Execution Model | Vectorized Execution (2-8x faster) | Standard Execution |
| Data Handling | Zero-Copy Workflows | Traditional Data Handling |
| OLAP Speed | 1000x+ speedups via postgres_scan() | Standard OLAP Performance |
| Complexity | 10% of the complexity | Higher Complexity |

Tip: DuckDB is a good choice if you want to use less memory and CPU for most jobs.

Cost is important when you pick a data engine. DuckDB runs on one computer. You do not need to buy extra servers. This keeps costs low for small and medium jobs. You can use your laptop or a simple server.

Spark works best with many computers. You may need to pay for a group of servers or cloud space. This can cost more, especially if you do not have huge datasets. Big companies with lots of data may find Spark’s cost worth it. For most people, DuckDB is cheaper.

Energy use matters for saving money and helping the environment. DuckDB uses less energy because it finishes jobs faster and uses fewer resources. You can run many queries without using much power or heating up your computer. Spark uses more energy, especially with many computers. DuckDB is the greener choice for most jobs.

Note: Picking the right engine helps you save money and energy and get your work done faster.

When you work with data on a single computer, you want speed and simplicity. DuckDB gives you both. You can run queries on your laptop or desktop and get answers quickly. DuckDB is built for single-node jobs. It uses your computer’s memory and CPU very well. You do not need to set up a cluster or manage extra software. This makes your work easier and faster.

DuckDB shines when you handle mid-size analytics. If your data is too big for tools like Excel but not huge, DuckDB is the best choice. You see better speed and efficiency than Spark at this scale. Spark can run on one machine, but it needs more setup and uses more resources. You may wait longer for results. When you compare DuckDB and Spark at the local scale, DuckDB stands out for quick answers and low resource use.

Tip: If you want to analyze data up to 200GB on your own computer, DuckDB gives you the best mix of speed and ease.

Here is a quick look at how both engines perform on a single node:

| Feature | DuckDB (Local) | Spark (Local) |
|---|---|---|
| Setup Time | Under 10 seconds | Several minutes |
| Query Speed | Very fast | Slower |
| Resource Use | Low | Higher |
| Best Use Case | Mid-size analytics | Learning, small tests |

You can see that the comparison favors DuckDB when you work locally.

When your data grows beyond what one computer can handle, you need to scale out. Spark was made for this. You can connect many computers together and process huge datasets. Spark lets you run jobs on clusters with hundreds or thousands of nodes. This is where Spark has the scale advantage. You can finish tasks that would not fit in memory on a single machine.

DuckDB focuses on single-node performance. It works best with moderate data sizes and simple jobs. If you try to use DuckDB for very large datasets across many computers, you may run into limits. Spark, on the other hand, handles distributed jobs well, but you must manage clusters and deal with network overhead. This can make things more complex.

Here are some points to help you compare DuckDB and Spark at the distributed level:

- DuckDB is optimized for single-node jobs. You get great speed for aggregations and joins on moderate data.
- Spark is built for clusters. You can process petabytes of data, but you need to manage more moving parts.
- DuckDB may struggle with very large datasets in a distributed setup.
- Spark introduces network overhead and cluster management, which can slow things down if not set up well.

Note: If your job needs to run on many computers, Spark gives you the scale you need. You can process massive datasets, but you must handle more setup and tuning.

Here is a table to help you decide:

| Scale Type | DuckDB Strengths | Spark Strengths |
|---|---|---|
| Local | Fast, simple, low cost | Good for learning, small jobs |
| Distributed | Best for moderate data sizes | Handles huge data, scalable |

When you compare DuckDB and Spark at scale, you see that DuckDB leads for local jobs, while Spark wins for big, distributed workloads. You should choose the engine that matches your data size and team needs.

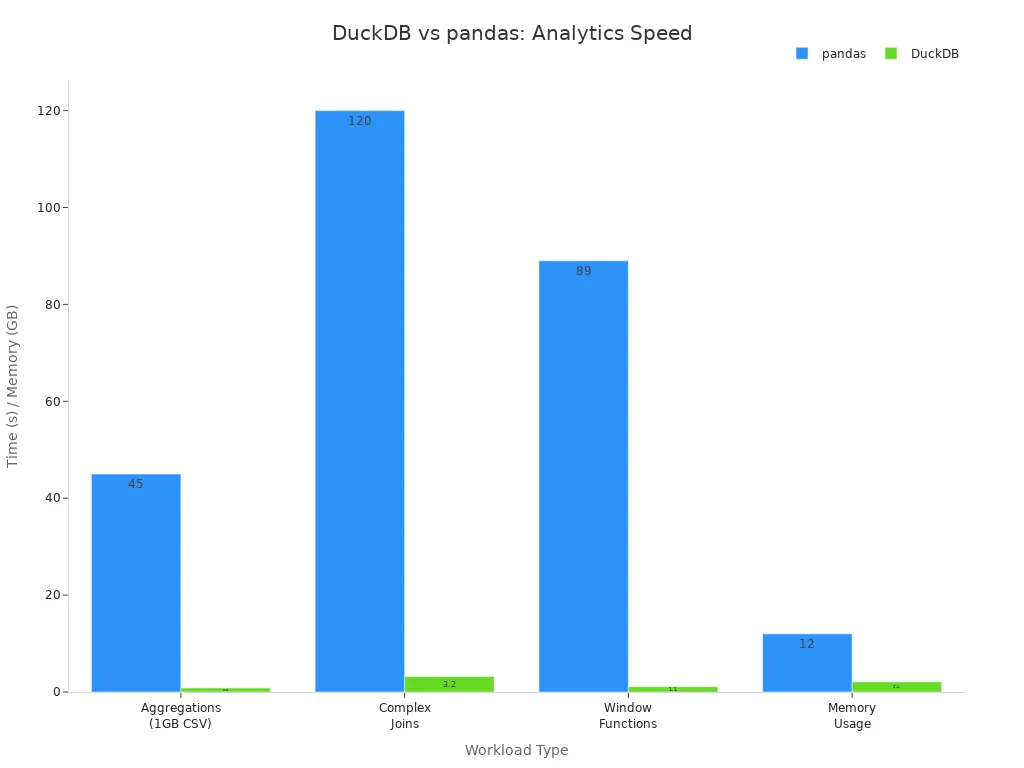

You want your analytics to be fast. DuckDB is much quicker than many engines. When you compare DuckDB to pandas, DuckDB wins by a lot. The table below shows how much faster DuckDB is:

| Workload Type | pandas | DuckDB | Performance Gain |
|---|---|---|---|
| Aggregations (1GB CSV) | 45s | 0.8s | 56x faster |
| Complex Joins | 120s | 3.2s | 37x faster |
| Window Functions | 89s | 1.1s | 81x faster |
| Memory Usage | 12GB | 2.1GB | 83% reduction |

DuckDB runs GROUP BY, joins, and window functions very quickly. It uses less memory, so your computer does not slow down. DuckDB reads CSV files in parallel. It also works with Parquet and JSON for even better speed.

Tip: DuckDB helps you get answers fast when you do analytics.
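The Performance Gain column in the pandas comparison follows from simple arithmetic: speedup is the pandas time divided by the DuckDB time, and the memory reduction is the saved memory as a share of the pandas figure. The short check below recomputes those values from the table; the helper names are illustrative.

```python
from typing import Dict, Tuple

# (pandas seconds, DuckDB seconds) copied from the table above.
RUNTIMES: Dict[str, Tuple[float, float]] = {
    "Aggregations (1GB CSV)": (45.0, 0.8),
    "Complex Joins": (120.0, 3.2),
    "Window Functions": (89.0, 1.1),
}


def speedup(baseline: float, candidate: float) -> float:
    """How many times faster the candidate is than the baseline."""
    return baseline / candidate


def reduction_percent(baseline: float, candidate: float) -> float:
    """Percentage saved by the candidate relative to the baseline."""
    return (baseline - candidate) / baseline * 100


speedups = {name: speedup(p, d) for name, (p, d) in RUNTIMES.items()}
memory_reduction = reduction_percent(12.0, 2.1)  # 12GB vs 2.1GB

for name, value in speedups.items():
    print(f"{name}: {value:.2f}x faster")
print(f"Memory Usage: {memory_reduction:.2f}% reduction")
```

The recomputed values (56.25x, 37.50x, 80.91x, and 82.50%) agree with the table once you allow for rounding.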

You do not want to spend lots of time setting up tools. DuckDB is easy to use because it is simple. You can install DuckDB in just a few seconds. You can start using SQL right away. Many people say DuckDB makes their code easier and saves time. The table below shows why DuckDB is simple:

| Description | Source |
|---|---|
| Embeddable and small, easy to add to apps | DuckDB - a primer |
| Less code and faster execution for analytics | DuckDB + Evidence.dev for Analyzing VC Data |
| Modern SQL syntax for efficient data handling | DuckDB + Evidence.dev for Analyzing VC Data |
| Frequent updates improve daily usability | DuckDB + DuckLake: Simplifying Lakehouse Workflows for Data Buyers |

You can spend more time on your analysis and less on setup.

You want your database to work everywhere. DuckDB runs on your laptop, desktop, or server. You do not need special computers or clusters. DuckDB works on Windows, macOS, and Linux. You can move your data and queries to different systems easily. DuckDB lets you analyze data anywhere. Other engines may need more setup for big jobs.

You want your data engine to grow with you. Spark can use one computer or thousands. You can work with huge datasets and not slow down. Spark uses distributed computing. This means you split jobs across many machines. Big jobs finish faster with Spark.

Here is how Spark performs in published hardware tests that compare two server CPUs:

| Benchmark | Ampere Altra Max Performance | Intel Ice Lake Performance | Performance Advantage |
|---|---|---|---|
| TeraSort Throughput | Higher by 18% | Baseline | 18% |
| TPC-DS Query Time | 21% faster for 3TB dataset | Baseline | 21% |

Spark gets faster when you add more computers. DuckDB works best on one computer. Spark handles petabytes of data with clusters. If your data grows, Spark can keep up.

Tip: Pick Spark if you need to process very large datasets or run jobs on many computers.

You want tools that work well together. Spark has a big ecosystem. You get lots of libraries and connections for different jobs. This makes your work easier and quicker.

The Spark ecosystem gets new libraries and tools every year.

You join a community that helps with Spark problems.

Spark gets updates often to stay current.

Many software and services work with Spark. You can choose what fits your needs by reviews, price, and features.

DuckDB is simple and portable. Spark gives you more choices for advanced analytics and connections.

You want to build smart tools. Spark helps you train machine learning models on huge datasets. You can start on your laptop and move to a cluster as your data grows. Spark supports Python, Scala, and R. You use built-in libraries for tasks like classification, regression, and clustering.

DuckDB is good for analytics. Spark gives you more options for machine learning with big data. You can run tests, tune models, and use solutions for large projects.

Spark is a good choice for scalable machine learning and advanced analytics in big companies.

Setting up a new data engine can be important. DuckDB is easy to install. You only need one command to get it. DuckDB works as a precompiled binary. You do not need extra software. The base image for DuckDB is small. It is just 216MB if you use Python. You can start using DuckDB in seconds.

Spark needs more work to set up. You must have Python and Java. Installing Spark has more steps. It needs more dependencies. The PySpark image is much bigger. It is about 987MB when not compressed. Many people use containers for Spark. This makes setup more complex.

DuckDB: Easy install, few dependencies, small size.

Spark: Needs Python and Java, bigger install, harder setup.

DuckDB lets you start fast. It is easier for quick setup.

You want your data tools to be simple to manage. DuckDB keeps things easy. It works as an embedded library. You do not need to manage clusters. You do not worry about network problems. This design means less work for you. You can deploy DuckDB easily. It keeps running with little effort.

Spark uses a distributed system. You must manage clusters and watch nodes. You also handle network delays. This setup makes maintenance harder. It gets tougher as your data grows.

DuckDB: Simple to deploy, fewer parts, less work.

Spark: Needs cluster management, more upkeep.

DuckDB saves you time on maintenance for most users.

A good community helps you learn and fix problems. DuckDB’s user group grew fast in 2025. Many people use DuckDB for analytics now. There are lots of talks about its speed and new features. Users compare DuckDB and Spark often. This shows people are interested and active.

Spark has a large, long-established community. You find many guides and forums for Spark. Both groups are active. DuckDB’s growth brings new ideas and support.

DuckDB: Fast-growing, active, focused on speed.

Spark: Large, established, lots of help.

Both tools have active communities. DuckDB’s growth brings fresh support and ideas.

Pick DuckDB if you want fast and easy data work. It is best for small or medium datasets. DuckDB is great for quick questions and local projects. You do not need to set up servers or clusters. You can run queries on your laptop or desktop. DuckDB installs fast and does not use much memory. This saves you time.

You get quick answers when testing ideas.

DuckDB works well for interactive analytics.

You skip hard setup and server work.

DuckDB is much faster than Spark for medium data.

Tip: If you want to look at data without extra gear or long setup, DuckDB is simple and strong.

Choose Spark when your data is too big for one computer. Spark is good for huge datasets, streaming, and jobs that need many computers. Spark helps you with hard ETL jobs and machine learning across lots of machines.

| Workload Type | Description |
|---|---|
| Datasets larger than single machine memory | Use Spark for data over 100GB. |
| Streaming data processing requirements | Spark handles real-time data streams. |
| Distributed feature engineering | Spark processes features across clusters. |
| Complex ETL pipelines combined with ML | Spark manages intricate data transformation and machine learning. |

Spark lets you work with very big data and do advanced analytics.

You can use both DuckDB and Spark together. Use Spark for big data jobs and to keep things safe if something fails. Use DuckDB when you need fast answers. This way, you save resources and work faster. Run big jobs with Spark and use DuckDB for quick checks or local work.

Hybrid setups help you with both big and small tasks. Watch memory use when Spark runs row-by-row operations. On complex jobs, these can cause Spark to run out of memory.

Using DuckDB and Spark together gives you speed, scale, and efficiency. You can handle any data job this way.
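The guidance in this article can be condensed into a small decision helper. This is a sketch under stated assumptions, not a definitive rule: the `Workload` class and `choose_engine` function are hypothetical, the 200 GB and 1 TB thresholds are taken from the figures quoted earlier in the article, and treating the range in between as a hybrid case is an assumption made for illustration.

```python
from dataclasses import dataclass


@dataclass
class Workload:
    dataset_gb: float
    needs_streaming: bool = False
    needs_distributed_ml: bool = False


def choose_engine(
    workload: Workload,
    single_node_limit_gb: float = 200.0,   # article: DuckDB is strong up to 200GB
    cluster_threshold_gb: float = 1000.0,  # article: Spark is better beyond 1TB
) -> str:
    """Return 'duckdb', 'spark', or 'both' for a given workload."""
    # Streaming and distributed ML are listed as Spark workloads regardless of size.
    if workload.needs_streaming or workload.needs_distributed_ml:
        return "spark"
    if workload.dataset_gb <= single_node_limit_gb:
        return "duckdb"
    if workload.dataset_gb >= cluster_threshold_gb:
        return "spark"
    # Assumption: in between, run heavy jobs on Spark and quick checks on DuckDB.
    return "both"


for example in (Workload(50), Workload(500), Workload(5000), Workload(10, needs_streaming=True)):
    print(example, "->", choose_engine(example))
```

The thresholds are parameters on purpose, since the right cutoffs depend on your hardware and how much of the data fits in memory.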

You have to pick the right engine for your data work. DuckDB is good for small and medium datasets. It gives fast answers and is easy to set up. Spark is better for bigger jobs. It can use many computers at once.

Key takeaways:

- DuckDB is faster for interactive queries and medium data.
- Spark is better for large, distributed workloads and fault tolerance.
- DuckDB deploys quickly and runs in notebooks.
- Spark supports advanced analytics and machine learning.

In 2025, choose DuckDB if you want speed and simple setup. Pick Spark when you need to handle lots of data and want strong reliability.
