AI SQL Optimization in 2026: Practical Guide for Engineers

If you write queries all day but only tune them when something breaks, you’re not alone. Many developers can express business logic in SQL yet hesitate when a plan goes sideways, a join explodes, or a predicate blocks index use. Meanwhile, AI promises faster optimization—but what does it really deliver in 2026? This guide separates signal from noise, outlines a disciplined workflow, and shows how to combine engine-native tools with AI assistance for safe, measurable gains.

AI models and assistants are excellent at pattern-driven refactoring and explanation. They can rewrite non‑SARGable predicates, replace correlated subqueries with set-based joins, convert dialects, and summarize execution plans so a developer can act faster. Microsoft’s engineering guidance on AI-based T‑SQL refactoring explicitly frames these capabilities as guardrail-driven, previewable changes that still require human acceptance and validation; see the Azure SQL blog on AI‑based T‑SQL refactoring (2025).

Here’s the catch: large language models are not cost-based optimizers. They don’t have your instance statistics, histograms, or workload history. Peer‑reviewed surveys and studies from 2024–2025 note gaps such as lacking cost models, difficulty generalizing across complex plans, and inference overhead that can outweigh benefits. A representative review is “Can Large Language Models Be Query Optimizers?” (ACM, 2025). The takeaway is simple: use AI for candidate rewrites and explanations, then rely on the database’s own metrics and plan tools to validate before rolling out. That balanced approach unlocks the value of AI SQL optimization without gambling on production.

Before touching a query, capture the data you’ll need to triage, test, and compare.

Document these in your ticket or PR. You’ll use them to judge whether any AI‑suggested rewrite actually helps.

Non‑SARGable predicates and implicit conversions
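This example uses Python's built-in sqlite3 module as a stand-in engine. The events table, index name, and dates are hypothetical. Wrapping the indexed column in a function hides it from the index, much as an implicit cast on the column does in other engines. An equivalent half-open range on the bare column keeps the predicate SARGable. The sketch checks two things: the two queries return the same rows, and only the rewrite's plan mentions the index.

```python
import sqlite3


def plan(conn: sqlite3.Connection, sql: str, params: tuple = ()) -> str:
    """Join the detail column of SQLite's EXPLAIN QUERY PLAN output."""
    rows = conn.execute("EXPLAIN QUERY PLAN " + sql, params).fetchall()
    return " | ".join(row[3] for row in rows)  # rows are (id, parent, notused, detail)


conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    CREATE TABLE events (id INTEGER PRIMARY KEY, user_id INTEGER, event_time TEXT);
    CREATE INDEX idx_events_time ON events (event_time);
    INSERT INTO events (user_id, event_time) VALUES
        (1, '2026-01-14 23:59:59'),
        (1, '2026-01-15 08:30:00'),
        (2, '2026-01-15 17:45:00'),
        (3, '2026-01-16 00:00:00');
    """
)

# Non-SARGable: date() is evaluated per row, so the index cannot be searched.
slow_sql = "SELECT id, user_id, event_time FROM events WHERE date(event_time) = ?"
# SARGable rewrite: a half-open range on the bare column can seek into the index.
fast_sql = ("SELECT id, user_id, event_time FROM events "
            "WHERE event_time >= ? AND event_time < ?")

day, next_day = "2026-01-15", "2026-01-16"
slow_rows = sorted(conn.execute(slow_sql, (day,)).fetchall())
fast_rows = sorted(conn.execute(fast_sql, (day, next_day)).fetchall())

print(slow_rows == fast_rows)                 # True: the same two rows either way
print(plan(conn, slow_sql, (day,)))           # a full SCAN of events
print(plan(conn, fast_sql, (day, next_day)))  # SEARCH ... USING INDEX idx_events_time
```

The rewrite is only equivalent because event_time holds uniformly formatted ISO-8601 strings. That kind of hidden assumption is exactly what the result comparison is there to catch.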

Correlated subqueries vs JOIN/aggregation
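Here is a small sketch of this rewrite, again using Python's sqlite3 with hypothetical users and events tables. Pay attention to the COUNT argument. After a LEFT JOIN, COUNT(*) also counts the NULL-extended row produced for a user with no events, so a rewrite that looks equivalent can silently change results.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT);
    CREATE TABLE events (id INTEGER PRIMARY KEY, user_id INTEGER REFERENCES users(id));
    INSERT INTO users (id, name) VALUES (1, 'ana'), (2, 'bo'), (3, 'cy');
    INSERT INTO events (user_id) VALUES (1), (1), (2);  -- user 3 has no events
    """
)

# Original: the subquery is evaluated once per row of users.
correlated = """
    SELECT u.id, (SELECT COUNT(*) FROM events e WHERE e.user_id = u.id) AS n
    FROM users u ORDER BY u.id
"""
# Set-based rewrite: one join plus one grouped aggregation. COUNT(e.id) skips
# the NULLs from the LEFT JOIN, so users without events still get 0.
joined = """
    SELECT u.id, COUNT(e.id) AS n
    FROM users u LEFT JOIN events e ON e.user_id = u.id
    GROUP BY u.id ORDER BY u.id
"""
# Plausible-looking but wrong: COUNT(*) counts the NULL-extended row as 1.
joined_wrong = joined.replace("COUNT(e.id)", "COUNT(*)")

baseline = conn.execute(correlated).fetchall()
print(baseline)                                           # [(1, 2), (2, 1), (3, 0)]
print(conn.execute(joined).fetchall() == baseline)        # True
print(conn.execute(joined_wrong).fetchall() == baseline)  # False: user 3 gets 1
```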

Over/under‑indexing
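On the over-indexing side, a cheap first check is to look for an index made redundant by a wider composite index that starts with the same columns, such as (user_id) next to (user_id, event_time). Every extra index adds write work on inserts and on updates that touch its columns. The heuristic below is a sketch for SQLite's PRAGMA index_list and PRAGMA index_info; other engines expose the same metadata through their system catalogs. The table and index names are hypothetical. A flagged index is a candidate for review, not for automatic dropping.

```python
import sqlite3


def index_columns(conn: sqlite3.Connection, table: str) -> dict[str, list[str]]:
    """Map each non-unique index on `table` to its ordered key columns."""
    result = {}
    # PRAGMA index_list rows: (seq, name, unique, origin, partial).
    # Identifiers are interpolated directly, so pass only trusted names.
    for row in conn.execute(f"PRAGMA index_list('{table}')"):
        name, unique = row[1], row[2]
        if unique:
            continue  # unique indexes enforce constraints; leave them alone
        # PRAGMA index_info rows: (seqno, cid, column_name); sort by seqno.
        info = sorted(conn.execute(f"PRAGMA index_info('{name}')"))
        result[name] = [col for _seqno, _cid, col in info]
    return result


def prefix_redundant(conn: sqlite3.Connection, table: str) -> list[tuple[str, str]]:
    """Flag (narrow, wide) pairs where narrow's columns are a leading prefix of wide's."""
    cols = index_columns(conn, table)
    flagged = []
    for narrow, n_cols in cols.items():
        for wide, w_cols in cols.items():
            if len(n_cols) < len(w_cols) and w_cols[: len(n_cols)] == n_cols:
                flagged.append((narrow, wide))
                break  # one wider index with the same leading columns is enough
    return sorted(flagged)


conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    CREATE TABLE events (id INTEGER PRIMARY KEY, user_id INTEGER,
                         event_time TEXT, kind TEXT);
    CREATE INDEX idx_user ON events (user_id);
    CREATE INDEX idx_user_time ON events (user_id, event_time);
    CREATE INDEX idx_kind ON events (kind);
    """
)
print(prefix_redundant(conn, "events"))  # [('idx_user', 'idx_user_time')]
```

The check only catches prefix overlap. A narrower index can still earn its keep, because it is smaller to scan, and exact duplicates or under-indexing (plans that fall back to a full SCAN) need separate checks.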

PostgreSQL

- EXPLAIN (ANALYZE, BUFFERS) to compare estimated vs actual rows and block I/O.
- pg_stat_statements for regression monitoring; see Monitoring Database Activity.

MySQL

- EXPLAIN ANALYZE FORMAT=JSON for iterator timings and row counts.
- SET optimizer_trace='enabled=on'; then SELECT from information_schema.OPTIMIZER_TRACE; see the Optimizer Trace documentation.

SQL Server

- SET STATISTICS IO, TIME ON for lightweight metrics; capture the actual plan (SSMS or STATISTICS XML).

Oracle

- DBMS_XPLAN.DISPLAY_CURSOR and Real‑Time SQL Monitoring.

AI suggestions should pass the same tests you’d apply to any manual optimization.
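One of those tests is result equivalence. This is a minimal sketch with Python's sqlite3 as a stand-in engine; the tables, data, and helper name are hypothetical. It runs the original query and the candidate over several parameter sets and compares the rows as multisets. In this data, a plausible IN-to-JOIN rewrite passes for some thresholds but duplicates users for others, which is why a single happy-path parameter is not enough.

```python
import sqlite3
from collections import Counter


def mismatched_params(conn, original, candidate, param_sets, ordered=False):
    """Return the parameter sets for which the candidate's rows differ from the original's."""
    bad = []
    for params in param_sets:
        expected = conn.execute(original, params).fetchall()
        actual = conn.execute(candidate, params).fetchall()
        # Compare as multisets (duplicates count) unless ORDER BY is part of the contract.
        same = expected == actual if ordered else Counter(expected) == Counter(actual)
        if not same:
            bad.append(params)
    return bad


conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    CREATE TABLE users (id INTEGER PRIMARY KEY);
    CREATE TABLE orders (id INTEGER PRIMARY KEY, user_id INTEGER, total REAL);
    INSERT INTO users (id) VALUES (1), (2), (3);
    INSERT INTO orders (user_id, total) VALUES (1, 50), (1, 70), (2, 20);
    """
)

original = ("SELECT u.id FROM users u "
            "WHERE u.id IN (SELECT o.user_id FROM orders o WHERE o.total > ?)")
# Plausible rewrite that repeats a user once per matching order.
naive_join = ("SELECT u.id FROM users u JOIN orders o ON o.user_id = u.id "
              "WHERE o.total > ?")
# DISTINCT restores the semantics here only because u.id is a key of users.
fixed_join = naive_join.replace("SELECT u.id", "SELECT DISTINCT u.id")

param_sets = [(100,), (60,), (40,), (10,)]
print(mismatched_params(conn, original, naive_join, param_sets))  # [(40,), (10,)]
print(mismatched_params(conn, original, fixed_join, param_sets))  # []
```

Exact comparison can report false mismatches when float aggregates differ only by rounding. In that case, compare with a tolerance or round in the query.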

This governance mindset keeps AI SQL optimization grounded and safe.

Here’s a repeatable workflow you can adapt across engines:

Disclosure: SQLFlash is our product.

Scenario: A PostgreSQL aggregation query against a 5‑million‑row events table times out during peak hours. You’ve collected EXPLAIN (ANALYZE, BUFFERS), schema, and sample parameters. The baseline plan shows three problems: a nested loop driven by a correlated subquery, a large gap between estimated and actual rows on one filter, and heavy shared-buffer reads.

Approach: Feed the SQL text and plan snippet into SQLFlash to get candidate rewrites and index suggestions. The assistant proposes replacing the correlated subquery with a JOIN + grouped aggregation, and suggests a composite index on (user_id, event_time) to support the filter and ordering pattern. It also surfaces an implicit cast on event_time that blocks index use.
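You can check the access-path half of this suggestion before any staging run. This minimal sketch uses Python's sqlite3 as a stand-in for PostgreSQL, with SQLite's EXPLAIN QUERY PLAN playing the role of EXPLAIN. The schema, index name, and parameters are hypothetical. It builds the same empty schema with and without the composite index and compares plans for the filter-plus-ordering pattern. On PostgreSQL, the real check is still EXPLAIN (ANALYZE, BUFFERS) on staging data, because plan choice there depends on actual statistics.

```python
import sqlite3

# Filter on one user plus a time window, ordered by time (hypothetical query shape).
SQL = ("SELECT id, event_time FROM events "
       "WHERE user_id = ? AND event_time >= ? ORDER BY event_time")
PARAMS = (42, "2026-01-01")


def plan_for(with_index: bool) -> str:
    """Build a fresh in-memory schema and return the query's plan details."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE events (id INTEGER PRIMARY KEY, user_id INTEGER, "
                 "event_time TEXT, payload TEXT)")
    if with_index:
        conn.execute("CREATE INDEX idx_events_user_time ON events (user_id, event_time)")
    rows = conn.execute("EXPLAIN QUERY PLAN " + SQL, PARAMS).fetchall()
    conn.close()
    return " | ".join(row[3] for row in rows)  # rows are (id, parent, notused, detail)


before = plan_for(with_index=False)
after = plan_for(with_index=True)
print(before)  # full SCAN of events plus USE TEMP B-TREE FOR ORDER BY
print(after)   # SEARCH via idx_events_user_time; index order satisfies ORDER BY
```

Column order matters in this design. Putting the equality column (user_id) first lets the range on event_time and the ORDER BY both ride the same index. The reverse order would not serve the sort for a single user.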

Validation: On staging data, run the candidate rewrite with EXPLAIN (ANALYZE, BUFFERS). Check operator‑level timings, actual vs estimated rows, and whether the planner now uses the composite index (an Index Scan, Index Only Scan, or Bitmap Index Scan) instead of a Seq Scan. Confirm write‑path impact is acceptable by measuring insert/update latency on the events table. If metrics improve without regressions, proceed to a limited canary.
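Writing the promotion rule down as an explicit gate lets reviewers see the thresholds. This is a minimal sketch. It assumes you already have per-run latency samples in milliseconds from staging, both for the read query and for inserts/updates on the events table. The function names and the 20% read-gain and 10% write-regression thresholds are hypothetical placeholders to tune for your workload.

```python
from statistics import quantiles


def p95(samples_ms: list[float]) -> float:
    """95th percentile: the 19th of the 20-quantile cut points."""
    return quantiles(samples_ms, n=20)[18]


def ready_for_canary(base_read, cand_read, base_write, cand_write,
                     min_read_gain=0.20, max_write_regression=0.10) -> bool:
    """Hypothetical gate: require a p95 read win and cap the p95 write-path slowdown."""
    read_gain = 1 - p95(cand_read) / p95(base_read)       # 0.5 means 50% faster
    write_change = p95(cand_write) / p95(base_write) - 1  # 0.05 means 5% slower
    return read_gain >= min_read_gain and write_change <= max_write_regression


# Toy constant samples for illustration; real ones come from repeated staging runs.
base_read, cand_read = [100.0] * 20, [50.0] * 20
print(ready_for_canary(base_read, cand_read, [10.0] * 20, [10.5] * 20))  # True
print(ready_for_canary(base_read, cand_read, [10.0] * 20, [12.0] * 20))  # False: writes 20% slower
```

The gate uses p95 rather than the mean, so a rewrite that helps typical runs but worsens the tail does not slip through.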

Notes: Keep the change journal and diffs in your PR. If you need background on MySQL and index design principles, see the MySQL performance tuning practice guide (SQLFlash site). For a broader survey of AI tools, the Top AI SQL optimization tools (2025) overview may help frame alternatives.

AI can accelerate the routine parts of SQL tuning—finding anti‑patterns, drafting rewrites, surfacing plan insights—but it shouldn’t replace the optimizer or your validation process. Treat AI suggestions as hypotheses, prove them with engine metrics, and roll out changes under governance.

Ready to try this workflow? Use an AI assistant to propose rewrites, then validate with your engine’s native tools before canarying changes.

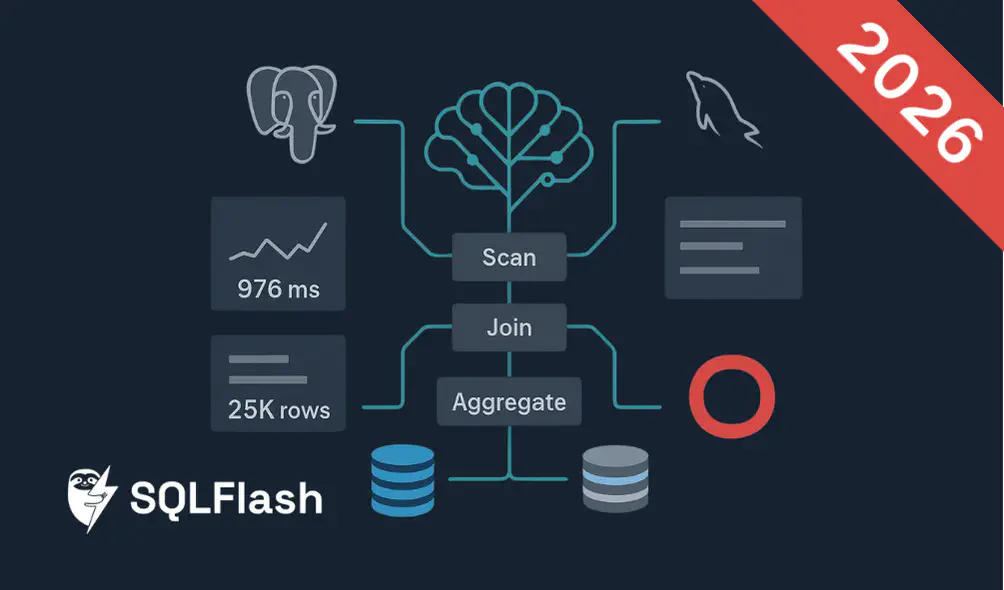

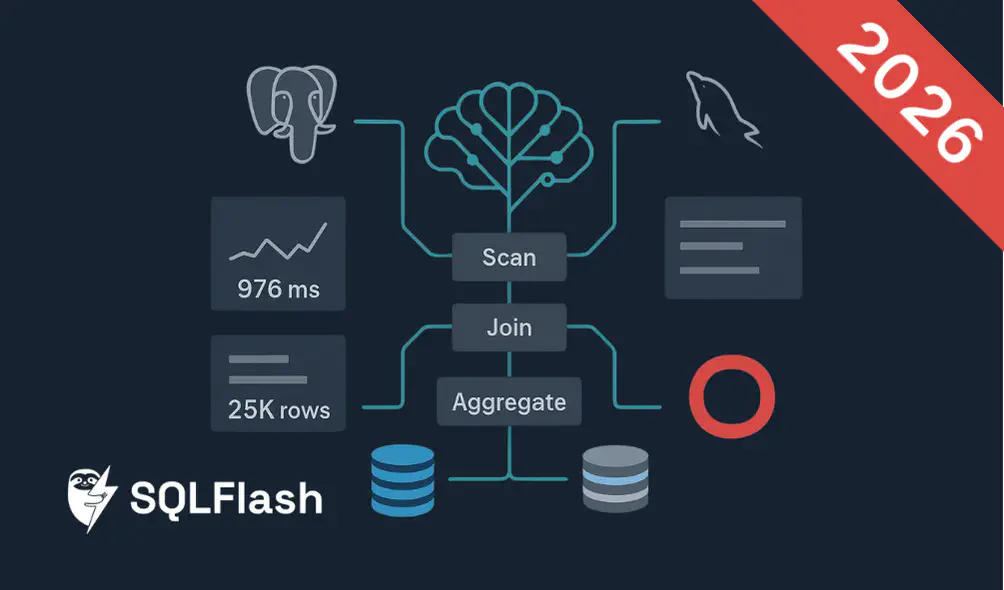

SQLFlash is your AI-powered SQL Optimization Partner.

Based on AI models, we accurately identify SQL performance bottlenecks and optimize query performance, freeing you from the cumbersome SQL tuning process so you can fully focus on developing and implementing business logic.

Join us and experience the power of SQLFlash today!